What’s happening in the world of AI:

Highlights

Cognitive Friction: How to Use AI Without Outsourcing Your Brain

I was building a robot the other day. Nothing fancy, just a basic little thing so I could learn the principles of robotics. The brain of the robot is a Raspberry Pi, a tiny single-board computer, and I was trying to get it to talk to the robot body. The hardware and the software were not connecting.

I spent ages going back and forth: writing out code, checking documentation, looking for spelling mistakes in my commands, reading error messages.

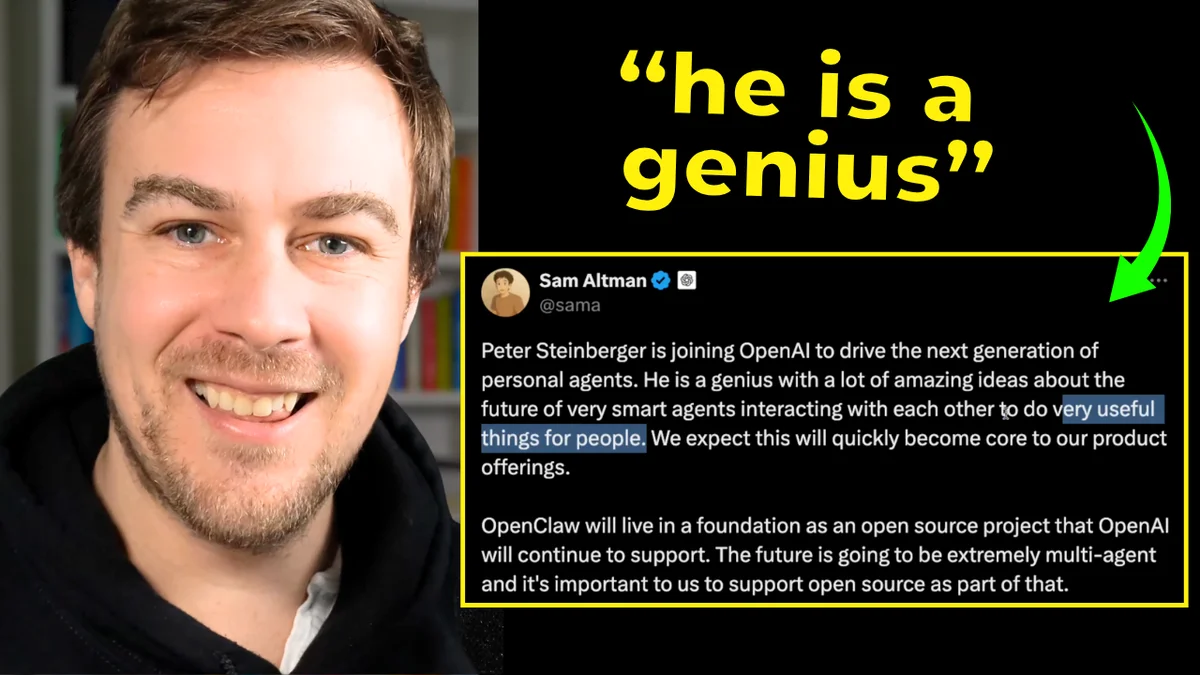

And then eventually I thought, what am I doing? I plugged the Raspberry Pi via USB into the computer running Claude Code and said: here's what I'm building, here's what I've done, here are the errors I'm getting. What's gone wrong?

Claude Code rewrote what it needed to, got through all the bugs, and when I plugged it back into the robot, it worked immediately. I got my result: a little robot that drives around and does its thing.

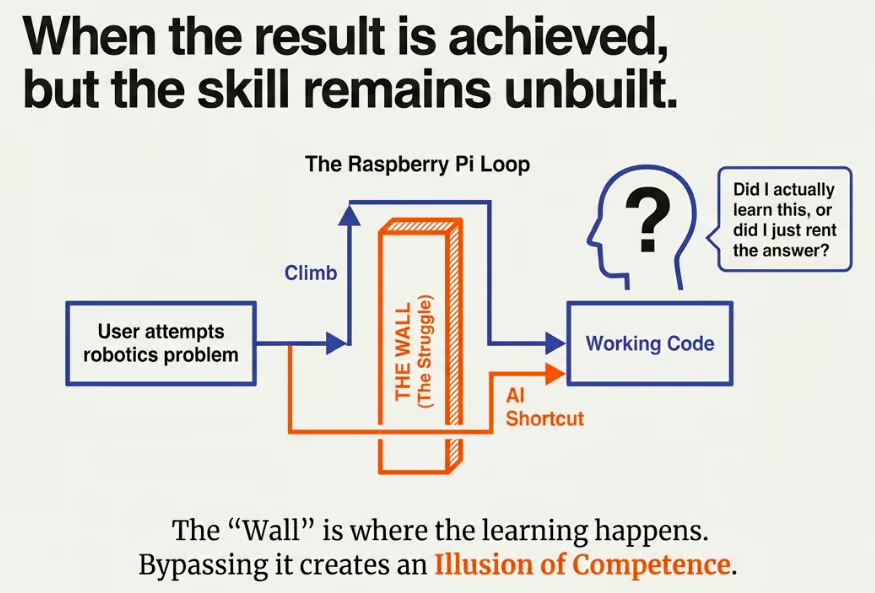

But I skipped the learning.

I skipped the (admittedly tiresome) process of hitting a wall, getting stuck, working out how to get past that wall, and therefore learning how to get past it next time. Instead, I went around it entirely. AI solved the problem and I got to the end result much quicker. But the knowledge gap is still there.

This morning, everyone in my WhatsApp group was talking about exactly this: they were worried about their kids using AI for homework. It led me to tackle this as a topic.

But it's not just a children's issue. It's all of us. We now have the option to entirely skip the hard part of learning, and the hard part is where all the learning actually happens.

The Hidden Trade-Off of Instant Answers

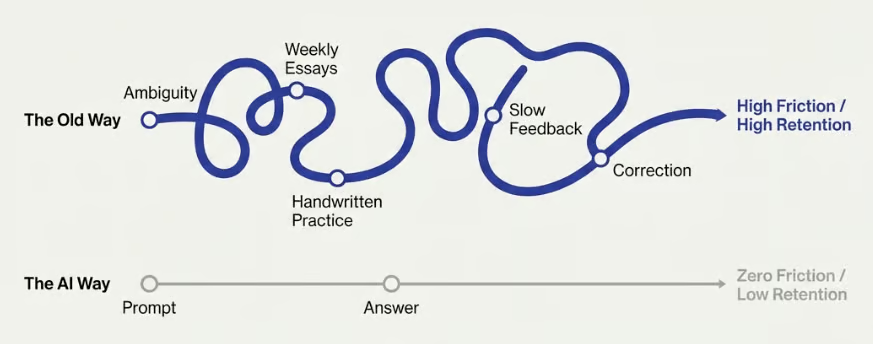

When I went to Oxford to study history, my tutor Matthew handed me a sheet of A4 paper on the first day. An essay title, a reading list, and the instruction: off you go, I'll see you in a week.

I had to go to the Bodleian Library, get the books, read them (or realise I couldn't read all of them in a week and prioritise), analyse the information, synthesise it into an argument, and write the essay. Sometimes two essays a week. For three years.

Now, to be fair, it’s a lovely library!

You'd run into dead ends. You'd have an argument in your head all week that would suddenly collapse. You'd have to rewrite everything. You'd only see your teacher once a week for limited feedback. It was hard work, hard grind, and a fantastic education.

Now imagine you're a first-year student, given the same essay title and reading list. It's your first week. You want to go out and get drunk and go to parties. AI knows all these books, or you can feed in the PDFs. You can skip the entire process and get a polished essay in minutes. Of course students are going to do this. They're 19. They have other priorities.

The problem is that the process I went through — the frustration, the dead ends, the rewriting — that was the education. The essay was just the proof that learning had occurred. Remove the process and you get the proof without the learning.

This creates what I'm calling the illusion of competence. You can put out an article, a document, a slide deck that looks and feels very competent. In front of other people, you seem like you know what you're talking about. But you haven't done any of the cognitive work in the background.

And you will get caught out.

For students: probably in their handwritten exams when suddenly ChatGPT feels very far away.

For adults: when we actually have to put our learning into action and realise we have no idea what to do.

AI Is Not Just a Calculator for Words

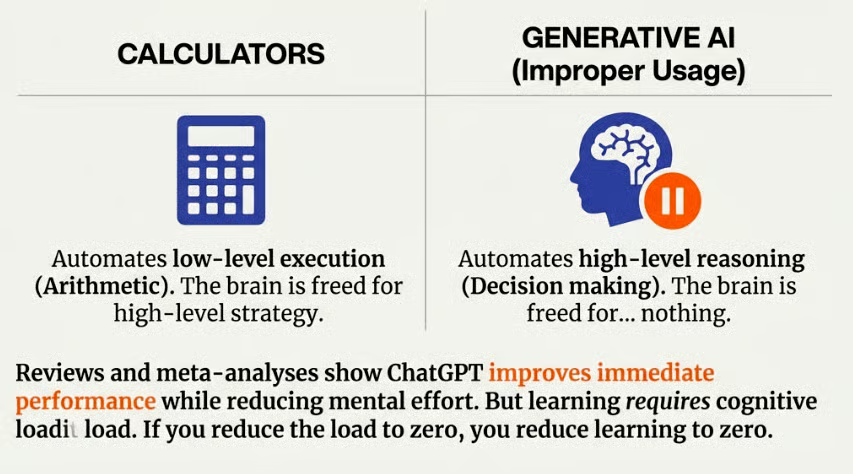

I keep seeing the argument that AI is just a tool, like a calculator. No. With a calculator, you still need to know what numbers to plug in and in what order. You need a conceptual understanding of the process. The calculator automates low-level execution (arithmetic) and frees your brain for high-level strategy.

Generative AI is fundamentally different. You can go to ChatGPT and work through complex problems in domains you have zero personal knowledge of, and get decent answers. You might not have the skill or training to tell whether the answer is good, but you'll get one. And it’ll sound pretty damn convincing.

The calculator is useless without knowledge. AI is useful without it, and that's exactly what makes it dangerous for learning.

A calculator automates execution. Generative AI, used improperly, automates reasoning and cognition. The brain is freed for... well, nothing. Reviews and meta-analyses show ChatGPT improves immediate performance while reducing mental effort. But learning requires cognitive load. Reduce the load to zero and you reduce learning to zero. Oops.

How Your Brain Actually Learns

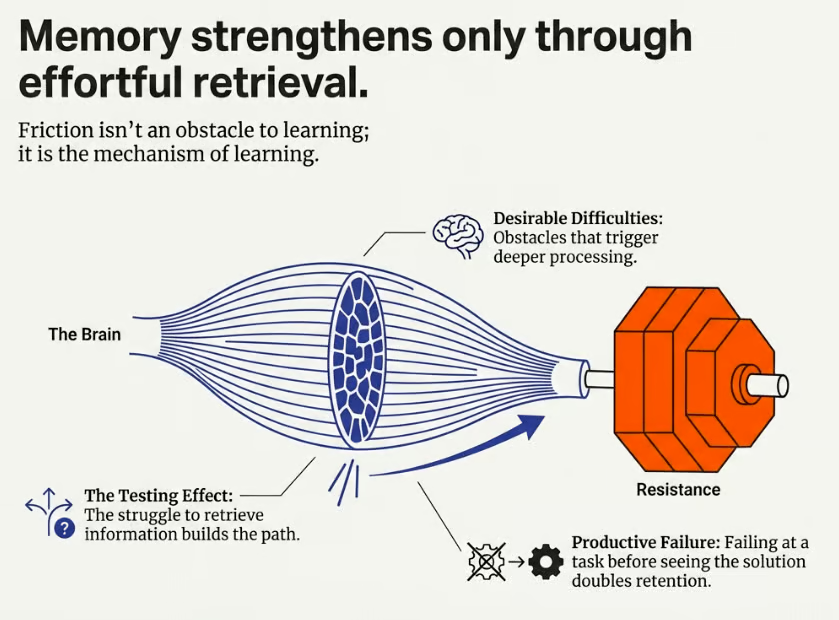

Your brain is like a muscle. It’s a crude but handy analogy.

You strengthen muscles by exhausting them and letting them regrow bigger. The brain needs similar exertion via recall.

Learning is about making a mistake, realising you've made a mistake, course correcting, and then not making that mistake again.

You have to fail in order to learn. By definition you cannot learn without failure.

It's like growing a muscle: you need repetition over time. You break the muscle down, and it grows back stronger. Your brain works similarly. It requires effortful work, productive failure, to get you to the point where you can do something with ease.

I love learning languages. I speak Chinese, and I'm learning Greek at the moment (I'm about to move to Cyprus). Learning a language is entirely about this process: trying to learn a word, getting it wrong, being corrected, saying it correctly next time. Again and again.

That's why just doing Duolingo vocabulary exercises isn't really language learning. A lot of people use Duolingo, go to the country, try to speak, and realise they can't actually communicate. Because they never went through the messy, embarrassing process of talking to real people, getting it wrong, and being corrected.

That difficulty is the mechanism of learning, not an obstacle to it. It has to be hard for it to work.

Six Principles for Learning With AI

OK! Let’s talk solutions.

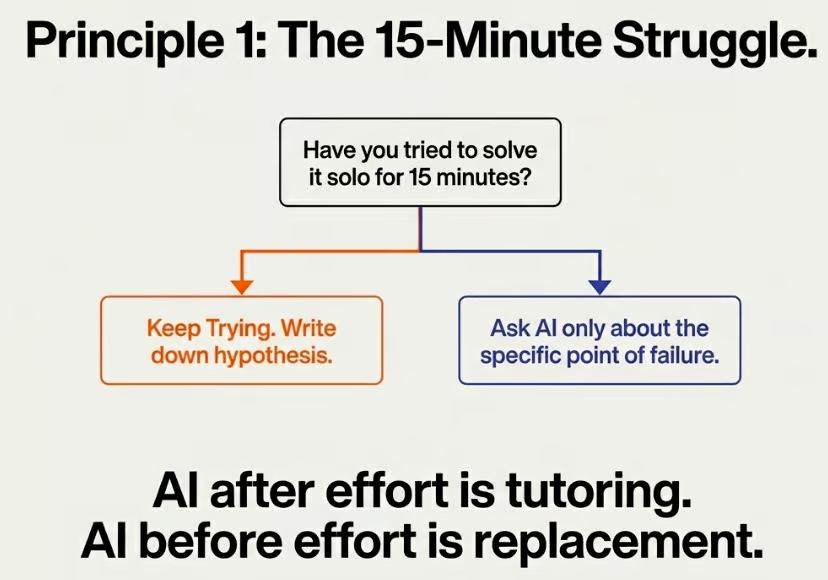

Principle 1: The 15-Minute Struggle. Before reaching for AI, spend 10-15 minutes trying to solve the problem yourself. This is different from my usual advice of "try AI first for everything" — that's good when you're learning what AI can and can't do. Once you have that grasp, push back. Try it manually first. If you can't crack it after 15 minutes, then go to AI, but go with a specific question about where you got stuck, not a request to do the whole thing.

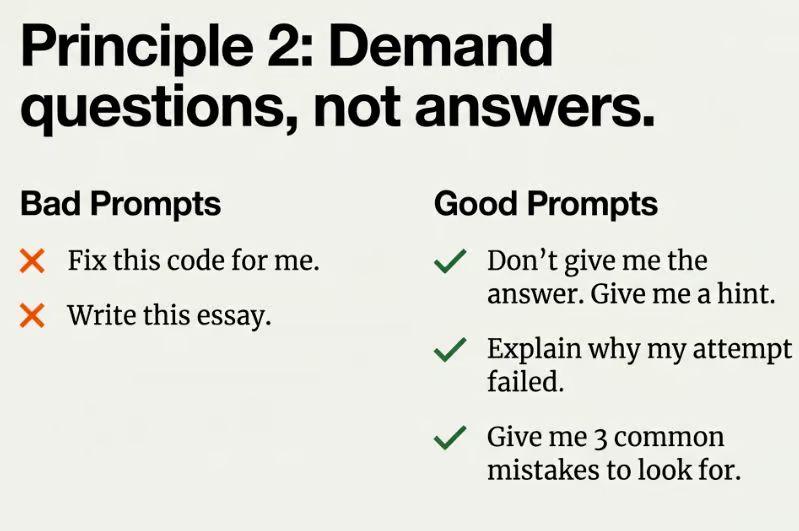

Principle 2: Demand questions, not answers. Don't say "fix this code for me" or "write this essay." Instead: "Don't give me the answer, give me a hint." Or: "Explain why my attempt failed." Or: "Give me three common mistakes to look for."

ChatGPT has actually built this in with Study Mode (under + → More → Study and learn). It won't immediately give you answers; instead it has a conversation with you and asks you questions, working like a tutor. Salman Khan's book "Brave New Words" covers this brilliantly, especially if you have children.

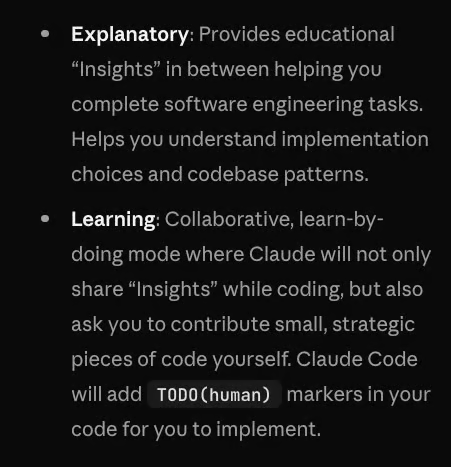

Fun fact: Claude Code also has a learning mode, which lets you learn to code while you code:

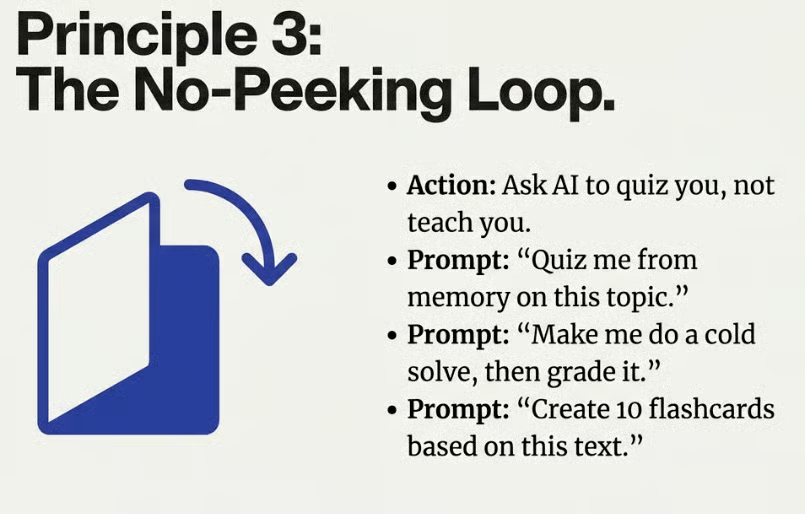

Principle 3: The No-Peeking Loop. Ask AI to quiz you, not teach you. Use prompts like: "Quiz me from memory on this topic," "Make me do a cold solve, then grade it," or "Create 10 flashcards based on this text."

FYI: NotebookLM is brilliant for this — you can feed it sources and it generates quizzes automatically. It’s also free. Yay.

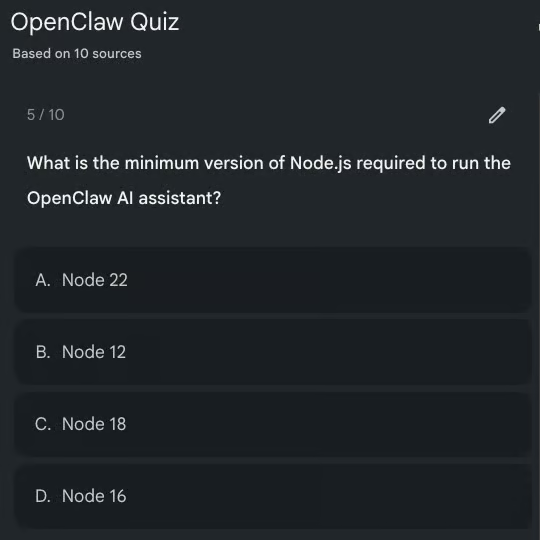

I did a live quiz during the stream on OpenClaw and it was genuinely fun (and I got caught out on a few questions, which is exactly the point).

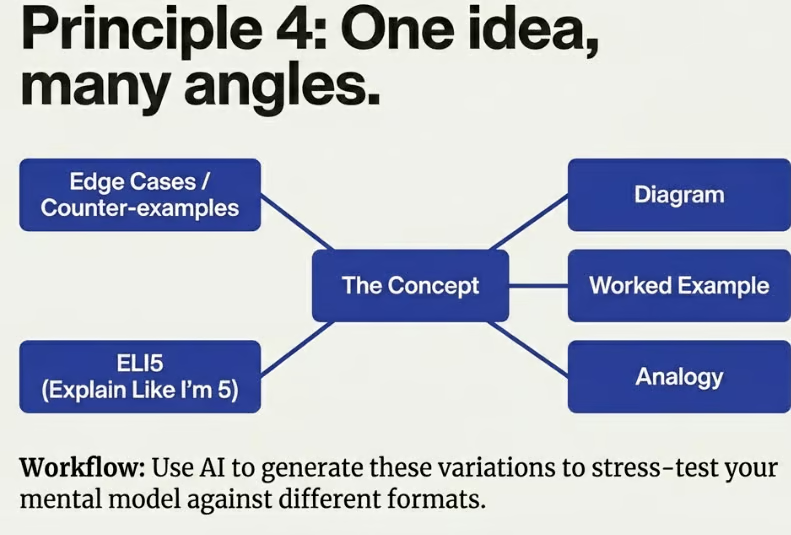

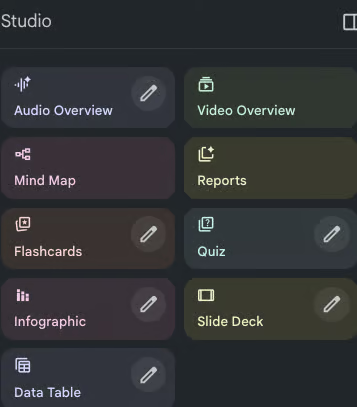

Principle 4: One idea, many angles. We all learn differently — some people are visual, some auditory, some tactile. AI lets you convert any material into any format. NotebookLM can take a book or article and generate podcasts (with a feature where you can actually join the conversation), video explainers, mind maps, infographics, flashcards, and quizzes. Hit the same topic from multiple modalities to reinforce your understanding. I personally find audio learning too slow, but you might love it. The point is to use AI to flesh out learning rather than shortcut it.

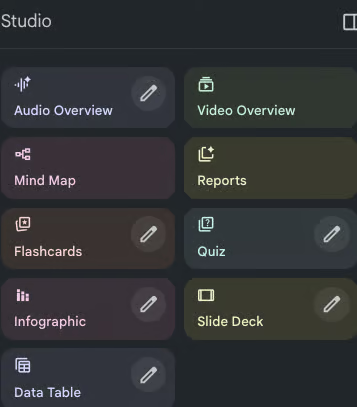

In NotebookLM we can generate all of these and more:

The current NotebookLM outputs

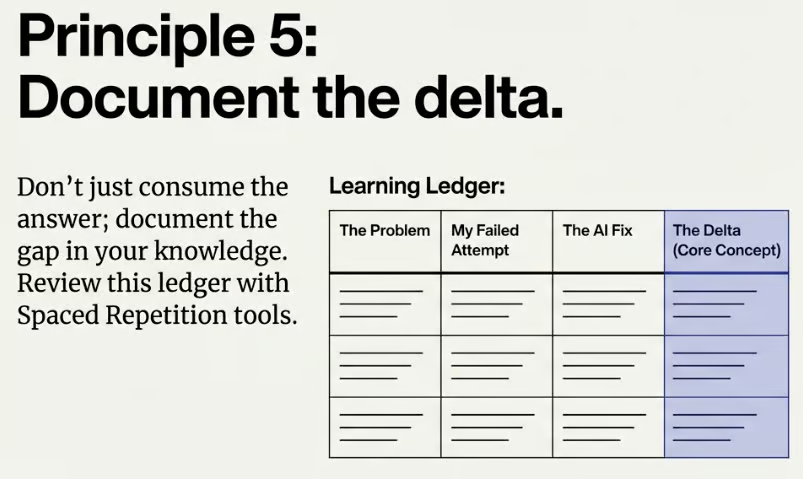

Principle 5: Document the delta. When AI fixes something for you, don't just consume the answer. Document the gap: what was the problem, what did you try, what did the AI fix, and what's the core concept you were missing?

This is obviously a lot more active. And probably only for actual students. But powerful if applied.

Keep a learning ledger and review it with spaced repetition. This turns a passive "AI fixed it" moment into an active learning event. You can do this with OpenClaw, by the way; it's a super powerful use case.
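A learning ledger doesn't need special software. Below is a minimal sketch in Python, assuming a simple Leitner-box schedule (review after 1, 3, 7, 14, then 30 days). The class name, field names, and intervals are hypothetical illustrations of my own, not the format used by OpenClaw or any other tool. Each entry records the four parts of the delta; it moves up a box when you recall the missing concept unaided and drops back to the first box when you don't.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical review intervals (in days) for each Leitner box.
# An illustrative choice, not a standard schedule.
INTERVALS = [1, 3, 7, 14, 30]


@dataclass
class DeltaEntry:
    """One 'document the delta' record in a learning ledger (hypothetical format)."""
    problem: str          # what went wrong
    my_attempt: str       # what I tried before asking AI
    ai_fix: str           # what the AI changed
    missing_concept: str  # the core idea I was missing
    box: int = 0          # Leitner box index (0 = review soonest)
    due: date = field(default_factory=date.today)

    def review(self, recalled: bool, today: date) -> None:
        """Promote on successful recall, reset to box 0 on failure, then reschedule."""
        if recalled:
            self.box = min(self.box + 1, len(INTERVALS) - 1)
        else:
            self.box = 0
        self.due = today + timedelta(days=INTERVALS[self.box])


def due_for_review(ledger: list[DeltaEntry], today: date) -> list[DeltaEntry]:
    """Return entries whose review date has arrived, earliest due date first."""
    return sorted((e for e in ledger if e.due <= today), key=lambda e: e.due)


if __name__ == "__main__":
    # Hypothetical example entry, purely for illustration.
    ledger = [
        DeltaEntry(
            problem="Script crashed with a KeyError",
            my_attempt="Printed the dictionary keys and re-read the traceback",
            ai_fix="Used dict.get() with a default value",
            missing_concept="Handling missing keys explicitly",
        )
    ]
    for entry in due_for_review(ledger, date.today()):
        # Try to explain the concept from memory before looking at ai_fix.
        print("Explain from memory:", entry.missing_concept)
        entry.review(recalled=True, today=date.today())
```

The key design choice is that a failed recall resets an entry to the shortest interval, so the concepts you keep forgetting are the ones you see most often.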

Principle 6: Strategic Disconnection. Read long-form books. Proper, boring books. Gross. Dull. Ick.

Schedule sessions with zero AI access. Re-train your boredom tolerance.

This isn’t me being a Luddite. It's about ensuring you remain the pilot even when the autopilot is off.

Want to go the extra mile? The Feynman method is powerful here: choose a concept, teach it to yourself or someone else, return to the source material if you get stuck, simplify your explanations and create analogies. If you can teach something, you've mastered it. (Guess why I spend so much time teaching? Bingo.)

A lot to take in here. And this will be a moving target for sure. Especially as AI gets smarter and smarter.

But, importantly: AI isn't the problem. Our unconscious usage is. We choose how to use it. For now at least! So use it wisely to better yourself rather than to remove yourself.

"Can you use AI to simulate the learning process?"

You could build a brilliant one-to-one tutor using something like OpenClaw. Spin up a specific agent: "You are my AI engineering advisor. Every day I want you to deliver some learning to me. Find something in the news, explain it, link it back to what you've already taught me, test me, proactively reach out and ask me questions."

That would be a very cool product. Or just a cool side project. Either!

But it still requires you to be part of the process. The danger is people offloading the cognitive load entirely because doing the work is hard.

Streaming on YouTube (with full 4K screen share) and TikTok (follow and turn on Live notifications).