AI with Kyle Daily Update 127

Today in AI: Dario and Demis talk AGI


What’s happening in the world of AI:

Highlights

The skinny on what's happening in AI - straight from the previous live session:

🎯 The Day After AGI: Two AI Leaders at Davos

The World Economic Forum brought together Dario Amodei (Anthropic) and Demis Hassabis (Google DeepMind) for a half-hour discussion called "The Day After AGI." These are arguably the two people building the best AI models in the world right now. Worth watching in full.

Here's what they said in short:

⏰ AGI Timelines: When Is It Coming?

Both believe AGI is possible. That's the headline.

Dario Amodei (Anthropic): Aggressive timeline. Predicts models at Nobel laureate levels by 2026-2027. That's this year or next. He believes timelines will be shorter than most expect because of self-improving loops - AI can now code and help improve AI. Claude Code is doing exactly this inside Anthropic.

Demis Hassabis (Google DeepMind): More cautious but still aggressive. 50/50 chance of AGI by the end of the decade. Notes we're still missing some capabilities for scientific creativity. Self-improving loops are critical, but they'll be slower in domains requiring physical experimentation or lacking easy verification.

Remember: these are CEOs of labs working towards AGI. They're not going to say it's impossible or 50 years away. But even the conservative estimate - 50/50 by 2030 - is extraordinary in historical terms. That’s the big takeaway.

💼 Labour Market Impact

Dario: Imminent and overwhelming. Predicts half of entry-level white-collar jobs could be gone in one to five years. Says it's already happening - entry-level hiring is slowing because companies can do more with AI.

Demis: Agrees on near-term disruption. Expects normal tech disruption for five years, with new jobs created afterwards. Post-AGI is uncharted territory.

Both agree: jobs will be incredibly disrupted for the next five years, probably longer. This is why I keep banging the drum about diversifying income streams. Don't rely on one pillar of income. Start a side project, freelance, build an app, do workshops - something so you have different sources of income when disruption hits.

We don’t know the exact shape disruption will take. But we do know that having ONE stream of income is not going to be as stable as having multiple.

🌏 China and Geopolitics

Dario: "We need to immediately stop selling chips to China." In his view, selling GPUs for AI training is akin to selling nuclear weapons. Don’t do it!

Demis: More moderate. Says we need international cooperation. If the US and China aren't talking, they're in an arms race where safety standards slip. Better if both countries collaborate on developing AI safely.

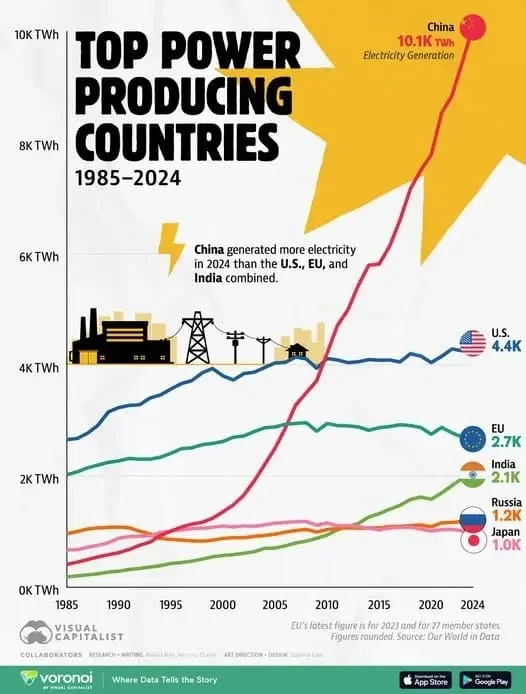

Interestingly, Trump also spoke at Davos. He got something right: the race is now about energy, not who has the best AI model. That's the next bottleneck. China has been sustainably investing in energy production for decades now. It recently built a solar installation the size of Bristol. If energy is the bottleneck, China is well ahead.

One of my favourite charts!

🛑 On "Slowing Down"

Some AI safety groups are reading this discussion as both CEOs agreeing to slow down.

I think this is slightly wishful thinking.

They can say whatever they want about slowing down amongst themselves. OpenAI, xAI, Anthropic, Google - even if all US labs agreed to slow down, it doesn't matter if China doesn't. And China won't. And even if the US labs try to slow down, the US government would probably tell them they're not allowed to slow down anyway.

These are media-trained CEOs. They know what they're saying. And reading this as a general call for a slow-down is very much cherry-picking to match a narrative.

📜 Claude's New Constitution

Anthropic released a reworked constitution for Claude. I'll link it below, but honestly... it feels like a PR stunt.

Anthropic are very good at the safety stuff - system cards, ethics papers, they even have a chief philosopher. But then you have Dario Amodei on stage basically saying "we need to go go go, beat China, it's gonna be messy" while his PR team diligently puts out documents about being "broadly safe, broadly ethical, compliant with Anthropic's guidelines."

The head of the company is going full steam ahead to build AGI as quickly as possible. And explicitly saying it’s going to be messy. If this constitution or the head philosopher get in the way, I don't think they'll have a say.

Source: Claude's new constitution | Full constitution

📚 Google's Education Push

Whilst everyone is at Davos, Google quietly released two education tools:

SAT Practice Exams - Full-length, on-demand practice exams inside Gemini, partnered with Princeton Review. Immediate feedback on where you excelled and where you need work. Free. This probably killed a hundred test prep startups overnight…

Khan Academy Writing Coach - Partnered with Sal Khan (founder of the amazing Khan Academy and author of "Brave New Words" which is about AI in education). The tool doesn't generate answers - it walks students through outlining, drafting, and refining their own ideas.

Interesting shift: Sal Khan originally worked with OpenAI back in the GPT-4 days. Now he's working with Google. I'd love to know what happened there!

These tools address the AI cheating problem. Instead of "write me an essay about Henry VIII's wives," students get guided through actually learning. Of course, it's still up to kids, teachers, and parents to use AI this way rather than just asking it to do their homework!

🔧 Claude Code Goes Mainstream

Claude Code is breaking into the mainstream! Quite right! The Wall Street Journal ran an article: "Claude Has Taken the AI World by Storm and Even Non-Nerds Are Blown Away."

They are catching up with us Nerds apparently…

They call it "getting Claude-pilled" - the moment software engineers, executives, and investors turn their work over to Claude and witness "a thinking machine of shocking capability."

Jensen Huang (NVIDIA CEO) at Davos: "Claude is incredible. Anthropic made a huge leap in coding and reasoning. Nvidia uses it all over. Every software company needs to use it."

For me, Claude Code is the closest feeling I've had to first using ChatGPT. I've only had that sensation a few times: ChatGPT's public release, Nano Banana Pro, and Claude Code. Those have been the watershed moments.

Ways to access Claude Code:

Claude Cowork (needs Pro/Max plan, Mac desktop app)

Claude Code tab in Claude itself

VS Code extension (free)

Cursor with Claude Code installed

Terminal (if you're comfortable with command line)

If you're stuck, just ask Claude or ChatGPT: "I've heard about this Claude Code thing. How do I set it up? I don't know what I'm doing." It'll walk you through.

Source: WSJ Article

Member Question: "Which is better - Claude Code with Cowork or GitHub Copilot Pro?"

Kyle's response: Claude Code. Easy one. GitHub Copilot is fine if you just want code completion. But Claude Code is a proper AI coding agent - it can do a lot more. It's not even close.

Want the full unfiltered discussion? Join me tomorrow for the daily AI news live stream where we dig into the stories and you can ask questions directly.
