AI with Kyle Daily Update 102

Today in AI: OpenAI Code Red + DeepSeek V3.2

The skinny on what's happening in AI - straight from the previous live session:

🚨 OpenAI Declares Code Red: Gemini Downloads Explode, China Cuts Prices 20x

Sam Altman declared Code Red, delays ads, demands improvements. Gemini app installs rocketing, Chinese models 20x cheaper. The monopoly is over.

Kyle's take: OpenAI just declared Code Red, in a leaked internal memo. Altman told employees they're delaying ads (which I predicted yesterday) to focus on improving ChatGPT. Why? They're being squeezed from both sides.

Gemini app downloads exploded (see chart) - that spike is Nano Banana going viral, first time Google's been relevant in two years.

Meanwhile, Chinese models like DeepSeek are 10-20x cheaper, or free if you host them locally. San Francisco startups are already switching because "good enough" at 1/20th the price beats "best" every time.

OpenAI had two years of freedom, ChatGPT became synonymous with AI. Now? People in my chat today asking "should I use Gemini or ChatGPT?" That wasn't even a question a month ago. Nobody cared about Google.

OpenAI will likely need to ship something impressive before Xmas to regain their dominance.

Source: Reuters coverage

🇨🇳 DeepSeek V3.2: China's Open Source Attack on America's AI Business Model

685 billion parameters, MIT license, 20x cheaper than GPT. Or, you know, free if you self-host. China's giving away what America charges for.

Kyle's take: DeepSeek V3.2 just dropped - 685B parameters, fully open source under MIT license (though they forgot to upload it on Hugging Face, whoops).

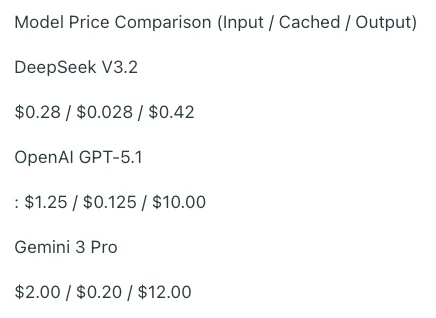

Not as good as Gemini 3 or Claude Opus but that does not matter. It’s cheap as hell:

Input 14 cents per million tokens vs ChatGPT's $2.50. That's 18x cheaper. San Francisco startups already reportedly switching because, let’s be honest, most tasks don't need state-of-the-art. Sorting data into buckets? GPT-3.5 from two years ago works fine. Why pay for GPT-5.1?
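To make the 18x figure concrete, here is a minimal sketch of the arithmetic using the two input prices quoted above. The monthly token volume is a hypothetical workload picked purely for illustration, and output-token pricing is ignored because only input prices are quoted here.

```python
# Input prices in USD per million tokens, as quoted above.
DEEPSEEK_INPUT_PER_M = 0.14
GPT_INPUT_PER_M = 2.50


def input_cost(tokens: int, price_per_million: float) -> float:
    """Cost in USD of sending `tokens` input tokens at the given price."""
    return tokens / 1_000_000 * price_per_million


# How many times cheaper the DeepSeek input price is.
ratio = GPT_INPUT_PER_M / DEEPSEEK_INPUT_PER_M

# Hypothetical workload: 500 million input tokens per month.
monthly_tokens = 500_000_000
deepseek_bill = input_cost(monthly_tokens, DEEPSEEK_INPUT_PER_M)
gpt_bill = input_cost(monthly_tokens, GPT_INPUT_PER_M)

print(f"Price ratio: {ratio:.1f}x")
print(f"Monthly input bill: ${deepseek_bill:,.2f} vs ${gpt_bill:,.2f}")
```

The ratio works out to just under 18, which is where the "18x cheaper" number comes from.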

This is strategic warfare - China knows exactly what they're doing. Releasing free open-source or very cheap API models that are "pretty damn good but not SOTA" will destroy the closed-source American business model of charging for access.

Right now the entire US AI investment thesis (and a good portion of the Western economy...) assumes people will pay a premium for the best. But if Chinese models are 90% as good at 5% of the price? Then that whole thesis starts looking shaky.

💻 Running Local Models: Fun Hobby, Terrible Business Decision

PewDiePie spent $20k on GPUs and still struggles. Companies spend millions on local models that are obsolete in months.

Kyle's take: Everyone asks about local models. Reality check: DeepSeek V3.2 is 685 BILLION parameters. PewDiePie built a $20,000 GPU rig and still struggles with inference, and training takes months. One H200 GPU costs £30,000. The Colossus datacenter has 100,000 H100s and 50,000 H200s. That's why they need billions.

I know companies that spent hundreds of thousands on local models and took months to deploy, then Claude/Gemini released something that made it obsolete. You're competing against people spending BILLIONS. Your million-dollar setup is a joke.

For phones: try the "Locally" app (iOS) and download Qwen models (1-2GB). For desktop: LM Studio. But these small models are nowhere near ChatGPT quality.

Fun hobby, and a good way to learn deployment, but practical daily use? Increasingly impossible as models get bigger. The calculus doesn't work unless you REALLY need that privacy.
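For a rough sense of scale, here is a back-of-the-envelope sketch of how many H200s it would take just to hold 685B parameters in memory. It uses the parameter count and the £30,000 per-GPU price quoted above. The 141 GB of memory per H200 is an assumption taken from NVIDIA's published spec, not from the stream, and the estimate covers model weights only. Real deployments need extra headroom for the KV cache and activations, so treat these as lower bounds.

```python
import math

PARAMS = 685e9           # parameter count quoted above
H200_PRICE_GBP = 30_000  # per-GPU price quoted above
H200_MEMORY_GB = 141     # assumption: per-GPU memory from NVIDIA's published spec


def gpus_needed(bytes_per_param: float) -> int:
    """Minimum H200 count to hold the weights alone at a given precision."""
    weights_gb = PARAMS * bytes_per_param / 1e9
    return math.ceil(weights_gb / H200_MEMORY_GB)


# Common weight precisions: 2 bytes (16-bit), 1 byte (8-bit), 0.5 bytes (4-bit).
for label, bytes_per_param in [("16-bit", 2), ("8-bit", 1), ("4-bit", 0.5)]:
    count = gpus_needed(bytes_per_param)
    print(f"{label}: at least {count} GPUs, roughly £{count * H200_PRICE_GBP:,}")
```

Even with aggressive 4-bit quantization, the weights alone do not fit on a single card under these assumptions.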

Source: PewDiePie's video, GPU pricing

Member Questions:

Kyle's response: DeepSeek V3.2 not as good as OpenAI, Claude, or Gemini 3. But for most things? Doesn't matter. Building software doesn't always need the top model.

There’s no need for 5.1 Thinking or Opus 4.5 for basic tasks. It just adds cost and slows response times. The complexity of most tasks hasn't increased - models from two years ago still handle them. So there’s no need to use the SOTA 80% of the time. This means models like DeepSeek V3.2 coming in just under the frontier models doesn’t matter that much. Not when it’s 20x cheaper!
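Putting the two numbers above together (80% of tasks don't need SOTA, and the cheaper model costs about 1/20th as much), a quick sketch of the blended bill looks like this. It assumes every task uses the same number of tokens, which is a simplification, and the function name is just illustrative.

```python
def blended_cost(cheap_share: float, price_ratio: float) -> float:
    """Relative spend versus sending everything to the frontier model (1.0).

    cheap_share: fraction of tasks routed to the cheaper model (0 to 1).
    price_ratio: how many times cheaper that model is.
    """
    frontier_part = 1 - cheap_share
    cheap_part = cheap_share / price_ratio
    return frontier_part + cheap_part


# 80% of tasks go to a model that is 20x cheaper, per the figures above.
relative = blended_cost(cheap_share=0.8, price_ratio=20)
print(f"Blended bill: {relative:.0%} of the all-frontier bill")
print(f"Savings: {1 - relative:.0%}")
```

Under those assumptions the bill drops to about a quarter of what it was, while the hard 20% of tasks still go to the frontier model.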

Kyle's response: Doubt it. Google has Google Images - decades of catalogued, sorted, labeled images. That's training data for Nano Banana. ChatGPT can scrape but Google makes it hard.

Meta has Instagram/Facebook but that's people, dogs, food - fairly limited pool. Google's data advantage is insurmountable, they’ve been building up their data catalogue for decades.

Kyle's response: Would've said GPT until yesterday. Four-part research task - completed part 1, said "do part 2," it did part 1 again. Three times. Context window issues, incredibly frustrating. Won't use GPT for deep research anymore.

Claude Opus 4.5 goes 20-30 minutes, pulls 800+ sources and absolutely knocks it out of the park on complex research tasks. Yesterday’s task gave me 821 sources in 17 minutes, another 642 in 20. Just walls of research.

Between GPT and Gemini? Gemini, because GPT's been annoying me lately. But I’d still go for Claude overall!

Kyle's response: Option three: Perplexity Pro is FREE with PayPal (not an affiliate link). It gives access to GPT-5.1, Claude Sonnet 4.5, Gemini 3 Pro, and Grok 4. It's missing the bells and whistles - no ChatGPT Canvas, no Claude Projects or the other tools. But all the models are in one place.

Want the full unfiltered discussion? Join me tomorrow for the daily AI news live stream where we dig into the stories and you can ask questions directly.
